The TRACE RERC is a research and development center at the University of Maryland, College Park. It is part of the College of Information and is funded by NIDILRR (the National Institute on Disability, Independent Living, and Rehabilitation Research).

Our mission at the Trace Research and Development Center is to capitalize on the potential that technologies hold for people experiencing barriers due to disability, aging, or digital literacy, and to prevent emerging technologies from creating new barriers for these individuals. In doing this, we bring together disciplines such as information science, computer science, engineering, disability studies, law, and public policy. We engage in research, development, tech transfer, education, policy, and advocacy.

Our vision is a world that is accessible and usable by people of all ages and all abilities – each experiencing information and technologies in a way that they can understand and use.

AI technologies are multiplying faster than assistive technologies, which leaves some people with disabilities behind. How can we include people with disabilities when building datasets?

Our technology-focused society often leaves out people who have not been able to learn skills such as opening a browser or sending an email. How do older adults feel about technology and the constant changes being made to it?

With the rise of touchscreen-based kiosks in public places, creating accessible kiosks is necessary, especially for individuals who rely on assistive technologies, such as screen readers, to read content. How can kiosks be designed to incorporate features for blind and low-vision users?

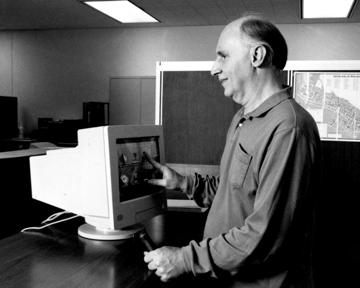

Morphic is an application that makes computers more accessible by providing accessibility and usability features (such as large text or color contrast) that can be used on any computer or device that has Morphic installed.

Photosensitive epilepsy is a condition in which a person is susceptible to seizures when exposed to content (such as videos, movies, and games) with specific features, like flashing lights or visual patterns. PEAT, the Photosensitive Epilepsy Analysis Tool, is a software program that can be used to scan content for material that could trigger seizures, helping creators release content that can be enjoyed by all.

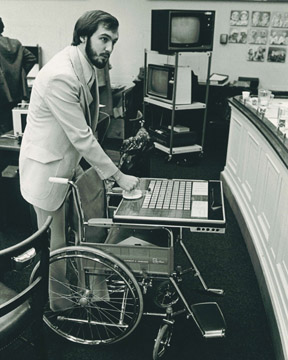

EZ Access is a set of interface enhancements that can make transaction machines and kiosks more accessible to people with disabilities, especially those who are blind or have low vision. It includes a tactile keypad with raised buttons that users can feel and press.

The Trace R&D Center was formed in 1971 by Gregg Vanderheiden. Since then, it has developed numerous technologies and tools that help people with varying abilities.