Teachable Interfaces

How can users with disabilities train technology to better meet their needs?

This research project is funded by the Inclusive Information and Communications Technology RERC (90REGE0008) from the National Institute on Disability, Independent Living, and Rehabilitation Research (NIDILRR), Administration for Community Living (ACL), Department of Health and Human Services (HHS). Learn more about the work of the Inclusive ICT RERC.

Project Team: Hernisa Kacorri (Lead); Kyungjun Lee and Jonggi Hong (Students)

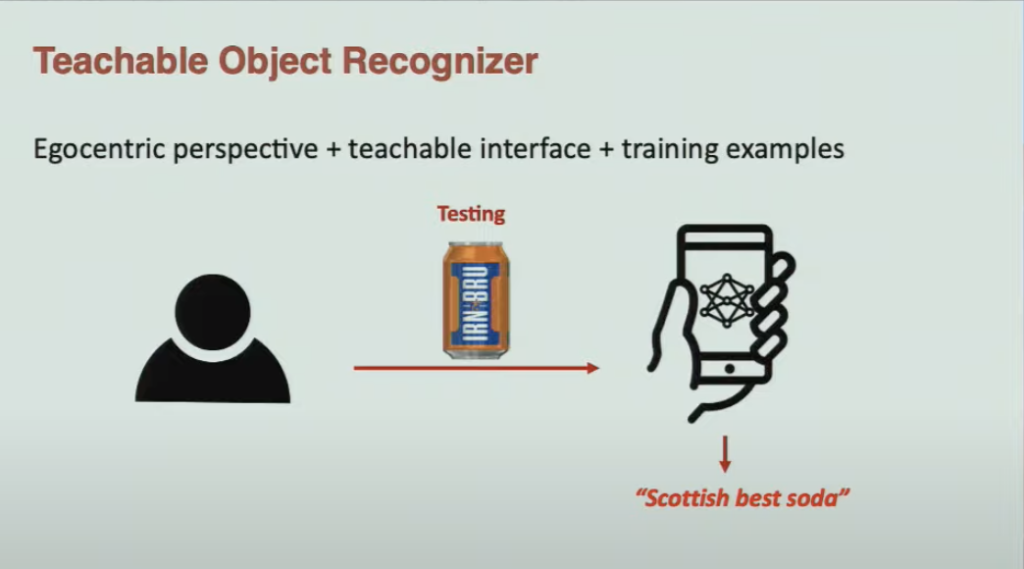

- Teachable Object Recognizer

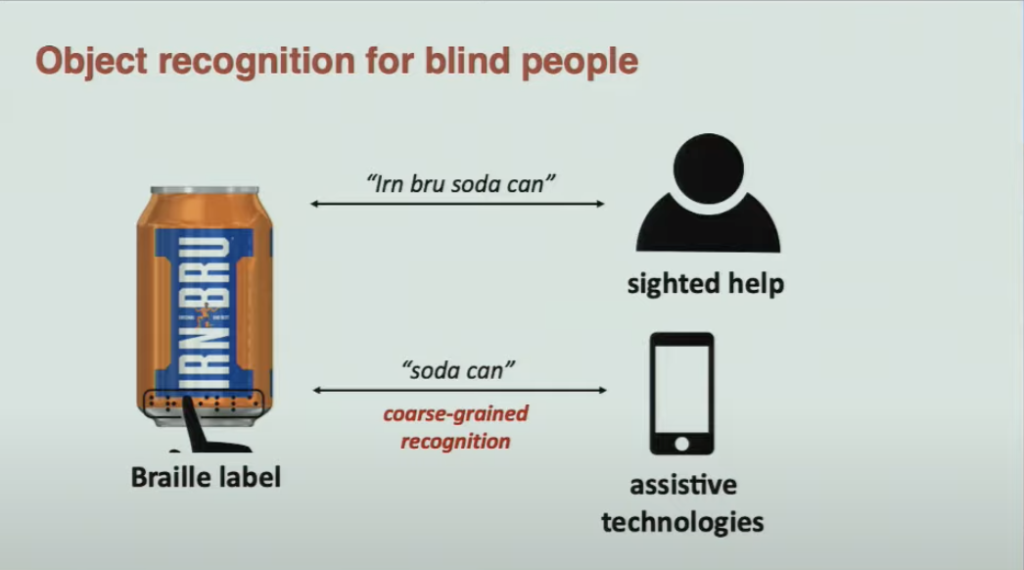

- Object recognition for blind people:

This project explores ways that existing recognizer technology (depicted in the slides above) can go beyond recognizing single items to handle items that appear in groups, such as those in a shopping setting. Slides are from a CHI 2019 presentation of “Hands Holding Clues for Object Recognition in Teachable Machines” by Kyungjun Lee and Hernisa Kacorri.

Potential impact of this project on the lives of people with disabilities

Identifying everyday objects can be one of the daily challenges that people with visual impairments face, particularly when trying to distinguish objects of different flavors, brands, or other specific characteristics, or to identify individuals in the environment.

Using camera-based technologies like smart glasses or mobile phone apps with teachable object recognizers can be a solution, but there are a number of challenges that must be overcome, including proper aiming of the camera and privacy concerns of other people in the environment.

This project will help make the following outcomes possible:

- Blind users being able to personalize their mobile phone or smart glasses to recognize objects or people of interest in their surroundings by first providing a few photos so that the artificial intelligence (AI) can recognize them later through the camera.

- Privacy issues related to AI-powered cameras being addressed in ways that consider the perspectives of both the blind users and the nearby people whose faces or objects might be captured.

Definitions

- Artificial intelligence (AI): “the theory and development of computer systems able to perform tasks that normally require human intelligence, such as visual perception, speech recognition, decision-making, and translation between languages.” (From Oxford Languages via Google)

- Teachable object recognizers are AI tools that learn from the people who will be using them, so that they are more accurate for those same users. These users may aim cameras, hold objects, or otherwise use the devices differently from what typical object recognizers are trained on.

- Smart glasses are wearable glasses that include computer technology.
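The idea of "teaching" a recognizer with a few examples can be illustrated with a minimal sketch. This is not the project's implementation: it assumes each photo has already been turned into a short list of numbers (a feature vector) by some image model, and the class name, method names, labels, and numbers below are all hypothetical. The recognizer averages the examples a user provides for each label and later picks the label whose average is closest to a new photo.

```python
from collections import defaultdict
from math import sqrt


class TeachableRecognizer:
    """Toy nearest-centroid classifier (hypothetical, for illustration only)."""

    def __init__(self):
        self._sums = {}                  # label -> element-wise sum of feature vectors
        self._counts = defaultdict(int)  # label -> number of examples seen

    def teach(self, label, features):
        """Add one user-provided example (a feature vector) for a label."""
        if label not in self._sums:
            self._sums[label] = [0.0] * len(features)
        self._sums[label] = [s + f for s, f in zip(self._sums[label], features)]
        self._counts[label] += 1

    def recognize(self, features):
        """Return the label whose average example is closest, or None if untaught."""
        best_label, best_dist = None, float("inf")
        for label, sums in self._sums.items():
            n = self._counts[label]
            centroid = [s / n for s in sums]
            dist = sqrt(sum((c - f) ** 2 for c, f in zip(centroid, features)))
            if dist < best_dist:
                best_label, best_dist = label, dist
        return best_label


# Made-up feature vectors standing in for a user's photos of two drinks.
recognizer = TeachableRecognizer()
recognizer.teach("cola", [0.9, 0.1, 0.2])
recognizer.teach("cola", [0.8, 0.2, 0.1])
recognizer.teach("lemon soda", [0.1, 0.9, 0.3])
recognizer.teach("lemon soda", [0.2, 0.8, 0.4])

print(recognizer.recognize([0.85, 0.15, 0.2]))  # prints "cola"
```

Because the averages come only from the user's own examples, the recognizer adapts to how that user aims the camera and holds objects, which is the property the definition above describes.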

PUBLICATIONS

- Hong, J., Gandhi, J., Mensah, E. E., Zeraati, F. Z., Jarjue, E. H., Lee, K., & Kacorri, H. (2022). Blind users accessing their training images in teachable object recognizers. In Froehlich, J., Shinohara, K., & Ludi, S. (Eds.), ASSETS ’22: The 24th International ACM SIGACCESS Conference on Computers and Accessibility (pp. 1-18, No. 14). New York: ACM. DOI: https://doi.org/10.1145/3517428.3544824

- Lee, K., Hong, J., Jarjue, E., Essuah Mensah, E., & Kacorri, H. (2022). From the lab to people’s home: Lessons from accessing blind participants’ interactions via smart glasses in remote studies. In Proceedings of the 19th International Web for All Conference (W4A ’22). New York: ACM. DOI: https://doi.org/10.48550/arXiv.2203.04282

- Read the full paper (pdf).

- Learn more about this work: Covid-19 Pandemic Moves Research on Assistive Technologies From the Lab to People’s Homes

- Lee, K., Shrivastava, A., & Kacorri, H. (2021). Leveraging hand-object interactions in assistive egocentric vision. IEEE Transactions on Pattern Analysis and Machine Intelligence. DOI: https://doi.org/10.1109/TPAMI.2021.3123303 PMID: 34705636

- Lee, K., Sato, D., Asakawa, S., Asakawa, C., & Kacorri, H. (2021). Accessing passersby proxemic signals through a head-worn camera: Opportunities and limitations for the Blind. In J. Lazar, J. H. Feng, & F. Hwang (Eds.), ASSETS ’21: The 23rd International ACM SIGACCESS Conference on Computers and Accessibility (pp. 1-15, No. 8). New York: ACM. DOI: https://doi.org/10.1145/3441852.3471232 PMCID: PMC8855357

- Read the full paper.

- Summary (coming soon)

- View the presentation from ASSETS 2021.

- Lee, K., Shrivastava, A., & Kacorri, H. (2020). Hand-priming in object localization for assistive egocentric vision. The IEEE Winter Conference on Applications of Computer Vision (pp. 3422-3432). Piscataway, NJ: IEEE. DOI: https://doi.org/10.48550/arXiv.2002.12557 PMCID: PMC7423407

- Lee, K., Sato, D., Asakawa, S., Kacorri, H., & Asakawa, C. (2020). Pedestrian detection with wearable cameras for the blind: A two-way perspective. In CHI ’20: Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (pp. 1-12). New York: ACM. DOI: https://doi.org/10.1145/3313831.3376398 PMCID: PMC7423406

- Read the full paper.

- Read a summary of this paper from NARIC, the National Rehabilitation Information Center.

- View presentation from CHI 2020.

- Lee, K., Hong, J., Pimento, S., Jarjue, E., & Kacorri, H. (2019). Revisiting blind photography in the context of teachable object recognizers. In ASSETS ’19: The 21st International ACM SIGACCESS Conference on Computers and Accessibility (pp. 83-95). New York: ACM. DOI: https://doi.org/10.1145/3308561.3353799 PMCID: PMC7415326

- Lee, K., & Kacorri, H. (2019). Hands holding clues for object recognition in teachable machines. In CHI ’19: CHI Conference on Human Factors in Computing Systems (pp. 1-12, Paper No. 336). New York: ACM. DOI: https://doi.org/10.1145/3290605.3300566 PMCID: PMC7008716

- Read the full paper.

- View presentation from CHI 2019.

- Download and learn more about TEgO, a Teachable Egocentric Objects Dataset.

PRESENTATIONS

Accessing Passersby Proxemic Signals through a Head-Worn Camera: Opportunities and Limitations for the Blind [ASSETS 2021]

Pedestrian Detection with Wearable Cameras for the Blind: A Two-way Perspective [CHI 2020]

Hands Holding Clues for Object Recognition in Teachable Machines [CHI 2019]

TEgO Dataset

The Teachable Egocentric Objects Dataset (TEgO) contains images of 19 distinct objects taken by two users (one blind and one sighted), both using a smartphone camera, for training and testing a teachable object recognizer. The dataset is available for download.